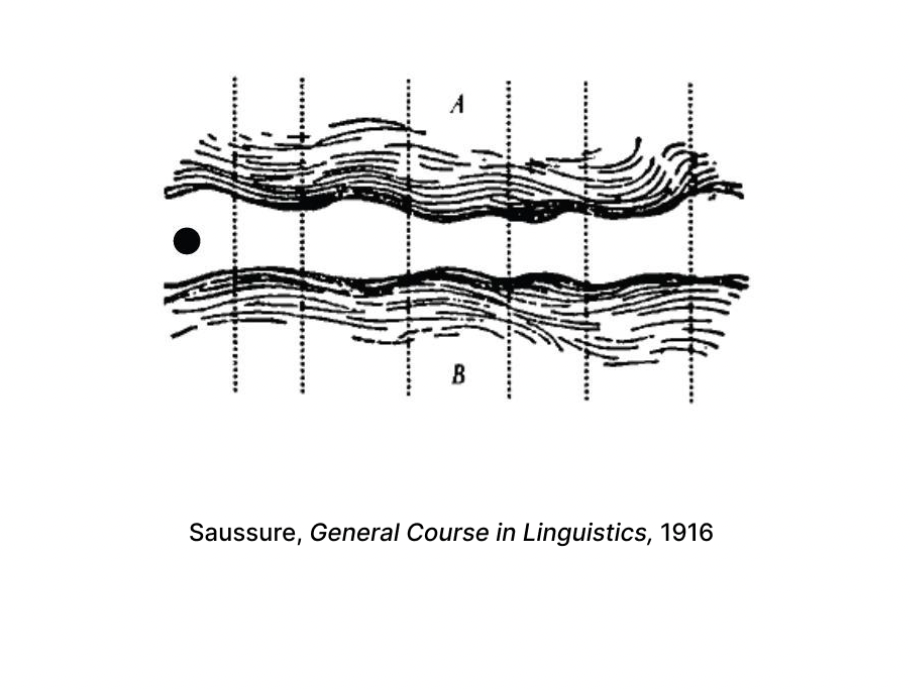

Trying to trace the cause of AI hallucinations, that is, cases where Large Language Models (LLMs) give a factually incorrect response, led us to examine the second L in LLM: language. The linguist Ferdinand de Saussure described a rift between our thoughts and the way we communicate them through a series of abstractions.

How can you contextualize this gap in the realm of AI?

Our answer is deliberately on the nose: you have to physically navigate the gap in language to contextualize it.

Your conversation partner is hidden from view. Your speech itself is taken from you and transcribed. Suddenly unsure how to navigate something that was once so familiar, you find that agreeing on meaning, and having an interpretation delivered to you by a projection, feels foreign. Do you question the text you end up with? Do you feel provoked or scammed by the gibberish? It is your job to derive meaning from what you created.

Interactive interface built with JavaScript, HTML and CSS and connected to a MIDI board

Together with Stephan Sinn and Viktor Bredal